BitNet is an ultra-lightweight, low-power large language model (LLM) developed by Microsoft. It is designed to run smoothly on an ordinary CPU, without a high-performance GPU.

Instead of the usual 16-bit or 32-bit floating-point numbers, it represents each weight with only three values: -1, 0, and +1. This greatly reduces memory usage and power consumption.
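The three-value representation is also where the name "b1.58" comes from: a weight that can take three values carries log2(3) bits of information. The lines below work this out, restate the absmean quantization rule described in the BitNet b1.58 paper for mapping a full-precision weight matrix W (n × m) to ternary values, and give a rough weight-storage comparison. The storage figures are a back-of-the-envelope sketch that assumes a round N = 2 × 10^9 weights and ignores embeddings, activations, and file-format overhead, so real model files will differ.

$$\log_2 3 \approx 1.585\ \text{bits per weight}$$

$$\gamma = \frac{1}{nm}\sum_{i,j}\lvert W_{ij}\rvert,\qquad \widetilde{W} = \mathrm{RoundClip}\!\left(\frac{W}{\gamma+\epsilon},\,-1,\,1\right),\qquad \mathrm{RoundClip}(x,a,b) = \max\bigl(a,\ \min(b,\ \mathrm{round}(x))\bigr)$$

$$\text{FP16: } \frac{16 \times 2\times10^{9}}{8}\ \text{B} = 4\ \text{GB},\qquad \text{ternary: } \frac{1.585 \times 2\times10^{9}}{8}\ \text{B} \approx 0.40\ \text{GB}$$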

BitNet b1.58, the model used in this post, is built specifically to run efficiently on CPU-only devices.

As a result, it runs without much trouble on almost any computer, even a Raspberry Pi, as long as it is set up correctly.

This guide uses a Raspberry Pi 5. The choice has no particular significance.
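The BitNet repository provides CPU kernels for ARM and x86, so it is worth confirming that the Pi is running a 64-bit OS before building. The helper below is a hypothetical sketch (the name `check_arch` is illustrative, not part of the project); it only classifies the string reported by `uname -m`, and treating just 64-bit ARM and x86 as supported is an assumption.

```bash
# Hypothetical helper: classify the CPU architecture string reported by `uname -m`.
# Assumption: only 64-bit ARM and 64-bit x86 are treated as supported build targets.
check_arch() {
  case "$1" in
    aarch64|arm64) echo "arm64" ;;
    x86_64|amd64)  echo "x86_64" ;;
    *)             echo "unsupported: $1" >&2; return 1 ;;
  esac
}
```

Usage: `check_arch "$(uname -m)"`. On a Raspberry Pi 5 running the 64-bit OS, `uname -m` reports `aarch64`, so this should print `arm64`; a 32-bit OS reports something like `armv7l` and is rejected.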

Building from source

```bash
# Install build packages
sudo apt update && sudo apt install -y \
    python3-pip python3-dev cmake build-essential \
    git software-properties-common

# Configure the LLVM apt repository and install clang 18
wget -O - https://apt.llvm.org/llvm.sh | sudo bash -s 18
```

This sets up the basic build environment.

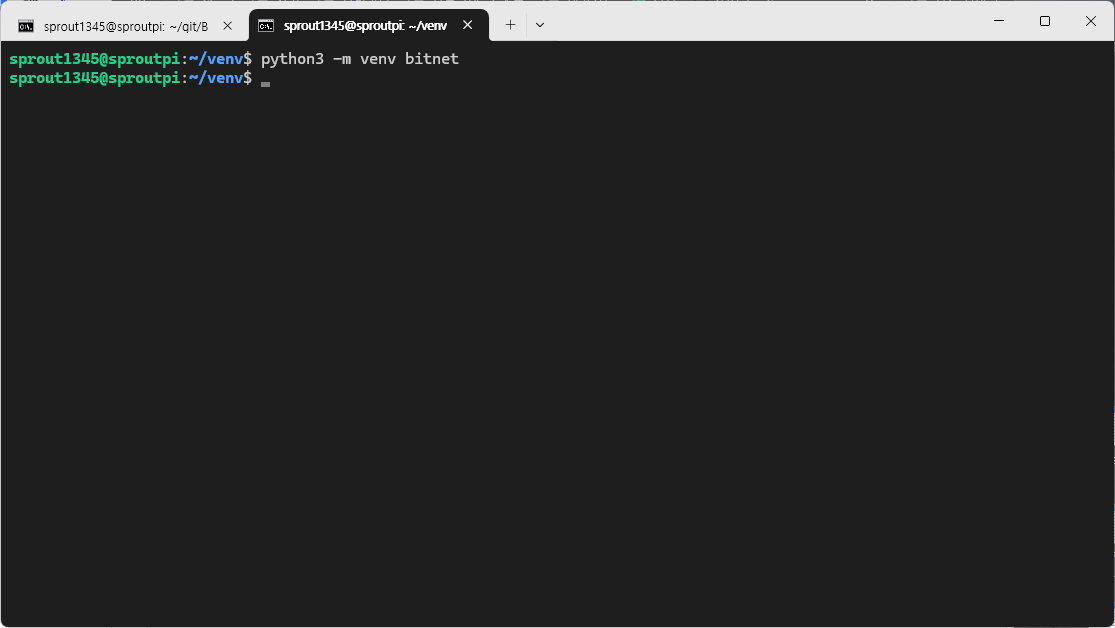
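The BitNet README lists clang 18 or newer as a requirement, and `llvm.sh` installs the compiler under a versioned name (`clang-18`). The hypothetical helpers below extract the major version from a `--version` banner so the requirement can be checked in a script; the function names and the parsing pattern are assumptions, not part of the project.

```bash
# Hypothetical helper: print the major version from a clang --version banner,
# e.g. "Ubuntu clang version 18.1.8 (...)" -> "18". Prints nothing if there is no match.
clang_major() {
  printf '%s\n' "$1" | sed -n 's/.*clang version \([0-9][0-9]*\)\..*/\1/p' | head -n 1
}

# Succeeds if the banner reports clang >= the given major version (default 18).
clang_at_least() {
  local major
  major="$(clang_major "$1")"
  [ -n "$major" ] && [ "$major" -ge "${2:-18}" ]
}
```

Usage: `clang_at_least "$(clang-18 --version | head -n 1)" && echo "clang OK"`. A banner from any other compiler, such as GCC, simply fails the check.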

Setting up a virtual environment

```bash
python3 -m venv bitnet
```

Create the virtual environment in a suitable location. I created one named 'bitnet' under "~/venv/" (that is, I ran the command above from inside ~/venv/).

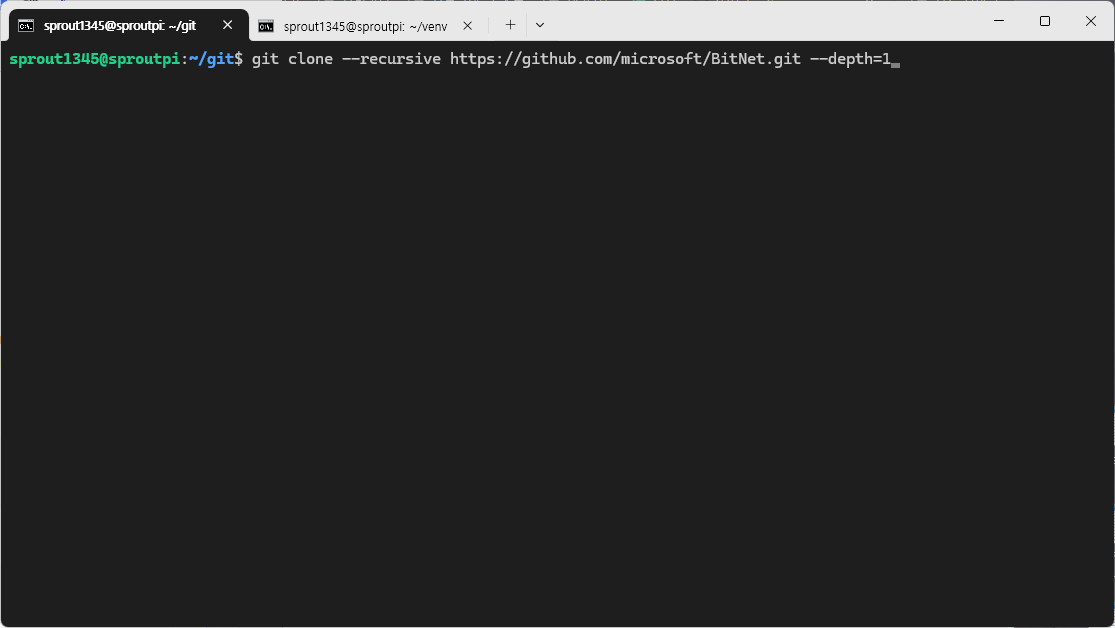
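To make the `~/venv/<name>` layout repeatable, the step can be wrapped in a small idempotent function. This is a hypothetical sketch (the name `create_venv` is illustrative): it creates the environment only if `~/venv/<name>/bin/python` does not already exist, and forwards any extra arguments to `python3 -m venv`.

```bash
# Hypothetical helper: create ~/venv/<name> unless it already exists.
# Any arguments after the name are passed through to `python3 -m venv`.
create_venv() {
  local name="$1"
  shift
  local dir="$HOME/venv/$name"
  if [ -x "$dir/bin/python" ]; then
    echo "already exists: $dir"
    return 0
  fi
  mkdir -p "$HOME/venv" || return 1
  python3 -m venv "$@" "$dir" || return 1
  echo "created: $dir"
}
```

Usage: `create_venv bitnet`, after which the environment is activated with `source ~/venv/bitnet/bin/activate` as in the next steps.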

Git clone

```bash
# Clone the repository
git clone --recursive https://github.com/microsoft/BitNet.git
cd BitNet
```

Clone the repository. To clone only the most recent revision, add the '--depth=1' option.

Don't forget the '--recursive' option. BitNet pulls in third-party source, llama.cpp, as a git submodule, so without this option that source is not cloned.

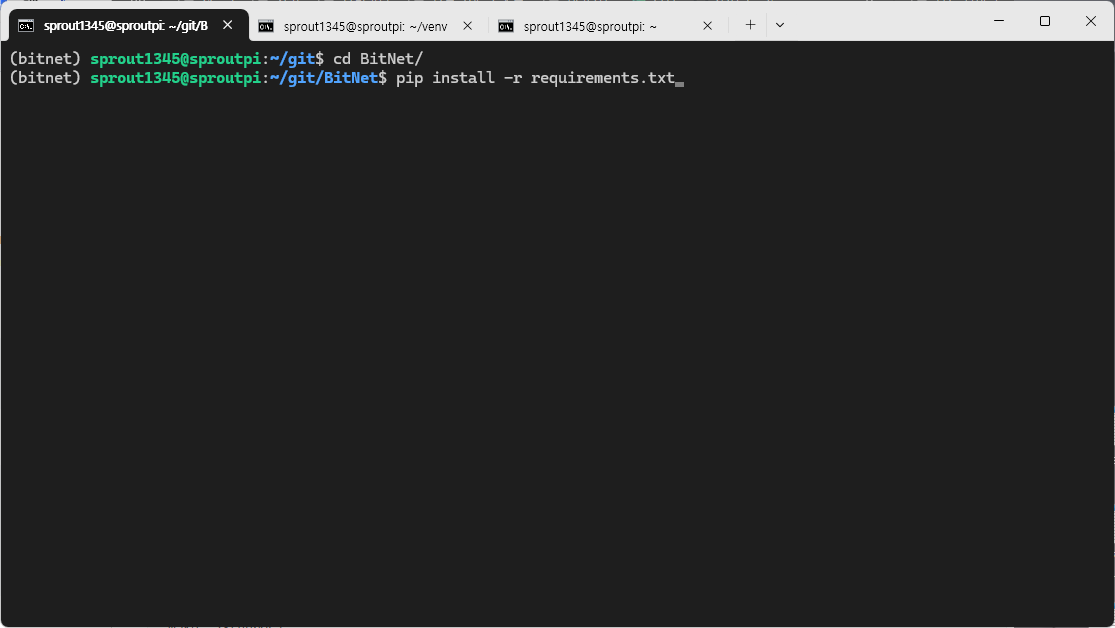
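If the repository was cloned without `--recursive`, the submodule directory `3rdparty/llama.cpp` is left empty. The sketch below checks for it and, if needed, fetches it with git's standard `git submodule update --init --recursive` command instead of re-cloning. The function names are hypothetical, and using llama.cpp's top-level `CMakeLists.txt` as the marker file is an assumption.

```bash
# Hypothetical check: succeeds if the llama.cpp submodule is checked out
# inside the given BitNet clone (default: current directory).
submodule_ready() {
  local repo="${1:-.}"
  [ -f "$repo/3rdparty/llama.cpp/CMakeLists.txt" ]
}

# Fetch missing submodules in an existing clone only when they are absent.
ensure_submodules() {
  local repo="${1:-.}"
  if ! submodule_ready "$repo"; then
    git -C "$repo" submodule update --init --recursive
  fi
}
```

Usage: run `ensure_submodules` from inside the `BitNet` directory; it does nothing when the submodule is already present.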

Installing pip packages

```bash
# Activate the virtual environment
source ~/venv/bitnet/bin/activate

# Install pip packages
pip install -r requirements.txt
```

Activate the Python virtual environment created earlier and install the packages listed in requirements.txt.
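Running `pip install` outside the virtual environment targets the system Python, which recent Raspberry Pi OS releases may refuse as an externally managed environment. A hypothetical guard (the name `venv_active` is illustrative) can use Python's standard test that `sys.prefix` differs from `sys.base_prefix` inside a virtual environment:

```bash
# Hypothetical check: succeeds if the given interpreter (default: python)
# is running inside a virtual environment (sys.prefix != sys.base_prefix).
venv_active() {
  "${1:-python}" -c 'import sys; sys.exit(0 if sys.prefix != sys.base_prefix else 1)'
}
```

Usage: `venv_active && pip install -r requirements.txt`, so the install only runs once `~/venv/bitnet` has been activated.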

Building (caution)

```bash
# Manually download the model and run with local path
huggingface-cli download microsoft/BitNet-b1.58-2B-4T-gguf --local-dir models/BitNet-b1.58-2B-4T
python setup_env.py -md models/BitNet-b1.58-2B-4T -q i2_s
```

Building exactly as the official GitHub page describes can fail with an error like the following:

```text
(bitnet) sprout1345@sproutpi:~/git/BitNet$ # Manually download the model and run with local path
huggingface-cli download microsoft/BitNet-b1.58-2B-4T-gguf --local-dir models/BitNet-b1.58-2B-4T
python setup_env.py -md models/BitNet-b1.58-2B-4T -q i2_s
Fetching 3 files: 0%| | 0/3 [00:00<?, ?it/s]Downloading '.gitattributes' to 'models/BitNet-b1.58-2B-4T/.cache/huggingface/download/wPaCkH-WbT7GsmxMKKrNZTV4nSM=.4e3e1a539c8d36087c5f8435e653b7dc694a0da6.incomplete'
Downloading 'ggml-model-i2_s.gguf' to 'models/BitNet-b1.58-2B-4T/.cache/huggingface/download/f28pn7v36EcygdlMWvHpzrkkz6A=.13939ce5030319a35db346e5dba7a3a3bd599dfc18b113a2a97446ff964714c5.incomplete'
Downloading 'README.md' to 'models/BitNet-b1.58-2B-4T/.cache/huggingface/download/Xn7B-BWUGOee2Y6hCZtEhtFu4BE=.c4a6897e03fbb0320ded5e0b686d8a5e1968154c.incomplete'
.gitattributes: 100%|██████████████████████████████████████████████████████████████| 1.64k/1.64k [00:00<00:00, 10.0MB/s]
Download complete. Moving file to models/BitNet-b1.58-2B-4T/.gitattributes | 0.00/1.64k [00:00<?, ?B/s]
README.md: 100%|███████████████████████████████████████████████████████████████████| 8.96k/8.96k [00:00<00:00, 31.9MB/s]
Download complete. Moving file to models/BitNet-b1.58-2B-4T/README.md | 0.00/1.84G [00:00<?, ?B/s]
ggml-model-i2_s.gguf: 100%|████████████████████████████████████████████████████████| 1.84G/1.84G [01:56<00:00, 15.8MB/s]
Download complete. Moving file to models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf███| 1.84G/1.84G [01:56<00:00, 15.8MB/s]
Fetching 3 files: 100%|███████████████████████████████████████████████████████████████████| 3/3 [01:57<00:00, 39.23s/it]
/home/sprout1345/git/BitNet/models/BitNet-b1.58-2B-4T
INFO:root:Compiling the code using CMake.
ERROR:root:Error occurred while running command: Command '['cmake', '--build', 'build', '--config', 'Release']' returned non-zero exit status 2., check details in logs/compile.log
```
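The ERROR line only says that the CMake build step failed; the details are in logs/compile.log. It also helps to know which compiler CMake actually selected, since the README requires clang 18 or newer. CMake records this in the build directory's `CMakeCache.txt` as `NAME:TYPE=VALUE` entries, and the hypothetical helper below (the name `cmake_cxx_compiler` is illustrative) prints the `CMAKE_CXX_COMPILER` entry.

```bash
# Hypothetical helper: print the C++ compiler recorded in a CMake build
# directory (default: build). Cache entries have the form NAME:TYPE=VALUE.
cmake_cxx_compiler() {
  local cache="${1:-build}/CMakeCache.txt"
  if [ ! -f "$cache" ]; then
    echo "no CMakeCache.txt in ${1:-build}" >&2
    return 1
  fi
  sed -n 's/^CMAKE_CXX_COMPILER:[A-Z]*=//p' "$cache" | head -n 1
}
```

Usage: `cmake_cxx_compiler build` from the repository root. If it points at the system GCC rather than clang-18, one option to try (an assumption to verify, since it depends on whether your version of setup_env.py passes its own compiler settings to CMake) is to delete `build/` and re-run setup_env.py with `CC=clang-18 CXX=clang++-18` exported, because CMake reads those variables only on a fresh configure.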

(bitnet) sprout1345@sproutpi:~/git/BitNet$ cat logs/compile.log

[ 1%] Building C object 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/ggml.c.o

cc1: warning: command-line option ‘-fpermissive’ is valid for C++/ObjC++ but not for C

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12514:6: warning: no previous prototype for ‘float_act_quant’ [-Wmissing-prototypes]

12514 | void float_act_quant(const int K, float* B, int32_t* dst, float* act_scale) {

| ^~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12530:6: warning: no previous prototype for ‘weight_quant_f32’ [-Wmissing-prototypes]

12530 | void weight_quant_f32(const int M, const int K, float* A, int32_t* dst, float* i2_scale) {

| ^~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c: In function ‘weight_quant_f32’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12545:35: warning: implicit conversion from ‘float’ to ‘double’ to match other operand of binary expression [-Wdouble-promotion]

12545 | dst[i] = (double)A[i] * i2_scale[0] > 0 ? 1 : -1;

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c: At top level:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12550:6: warning: no previous prototype for ‘weight_quant_f16’ [-Wmissing-prototypes]

12550 | void weight_quant_f16(const int M, const int K, uint16_t* A, int32_t* dst, float* i2_scale) {

| ^~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c: In function ‘weight_quant_f16’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12566:37: warning: implicit conversion from ‘float’ to ‘double’ to match other operand of binary expression [-Wdouble-promotion]

12566 | dst[i] = (double)temp_A * i2_scale[0] > 0 ? 1 : -1;

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c: At top level:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12571:6: warning: no previous prototype for ‘matrixMultiply_int’ [-Wmissing-prototypes]

12571 | void matrixMultiply_int(const int M, const int N, const int K, const int32_t* A, const int32_t* B, int32_t* C) {

| ^~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c: In function ‘ggml_compute_forward_mul_mat’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12660:44: warning: initialization of ‘bitnet_float_type *’ {aka ‘float *’} from incompatible pointer type ‘char *’ [-Wincompatible-pointer-types]

12660 | bitnet_float_type * bitnet_f_ptr = wdata;

| ^~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12664:25: warning: pointer targets in initialization of ‘int8_t *’ {aka ‘signed char *’} from ‘char *’ differ in signedness [-Wpointer-sign]

12664 | int8_t * qlut = cur_wdata;

| ^~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12675:42: warning: passing argument 1 of ‘ggml_bitnet_transform_tensor’ discards ‘const’ qualifier from pointer target type [-Wdiscarded-qualifiers]

12675 | ggml_bitnet_transform_tensor(src0);

| ^~~~

In file included from /home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:50:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/ggml-bitnet.h:35:65: note: expected ‘struct ggml_tensor *’ but argument is of type ‘const struct ggml_tensor *’

35 | GGML_API void ggml_bitnet_transform_tensor(struct ggml_tensor * tensor);

| ~~~~~~~~~~~~~~~~~~~~~^~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12679:51: warning: passing argument 2 of ‘ggml_fp32_to_fp16_row’ from incompatible pointer type [-Wincompatible-pointer-types]

12679 | ggml_fp32_to_fp16_row(src1->data, bitnet_f_ptr, ne10 * ne11);

| ^~~~~~~~~~~~

| |

| bitnet_float_type * {aka float *}

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:541:59: note: expected ‘ggml_fp16_t *’ {aka ‘short unsigned int *’} but argument is of type ‘bitnet_float_type *’ {aka ‘float *’}

541 | void ggml_fp32_to_fp16_row(const float * x, ggml_fp16_t * y, int64_t n) {

| ~~~~~~~~~~~~~~^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12722:50: warning: passing argument 1 of ‘ggml_fp16_to_fp32_row’ from incompatible pointer type [-Wincompatible-pointer-types]

12722 | ggml_fp16_to_fp32_row(act_output + dst_offset, (float *) dst->data + dst_offset, ne01 / n_tile_num);

| ~~~~~~~~~~~^~~~~~~~~~~~

| |

| bitnet_float_type * {aka float *}

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:535:48: note: expected ‘const ggml_fp16_t *’ {aka ‘const short unsigned int *’} but argument is of type ‘bitnet_float_type *’ {aka ‘float *’}

535 | void ggml_fp16_to_fp32_row(const ggml_fp16_t * x, float * y, int64_t n) {

| ~~~~~~~~~~~~~~~~~~~~^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12712:23: warning: unused variable ‘qlut_offset’ [-Wunused-variable]

12712 | const int qlut_offset = 0;

| ^~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12702:19: warning: unused variable ‘lut_tile_size’ [-Wunused-variable]

12702 | const int lut_tile_size = lut_size / n_tile_num;

| ^~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12666:29: warning: unused variable ‘lut_biases’ [-Wunused-variable]

12666 | bitnet_float_type * lut_biases = (bitnet_float_type *) (lut_scales + wt->lut_scales_size * ne11);

| ^~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/ggml.c:12653:19: warning: unused variable ‘bits’ [-Wunused-variable]

12653 | const int bits = ggml_bitnet_get_type_bits(type);

| ^~~~

[ 2%] Building C object 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/ggml-alloc.c.o

cc1: warning: command-line option ‘-fpermissive’ is valid for C++/ObjC++ but not for C

[ 3%] Building CXX object 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/ggml-backend.cpp.o

[ 4%] Building C object 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/ggml-quants.c.o

cc1: warning: command-line option ‘-fpermissive’ is valid for C++/ObjC++ but not for C

[ 5%] Building CXX object 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/__/__/__/__/src/ggml-bitnet-mad.cpp.o

In file included from /home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-quants.h:4,

from /home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:5:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:154:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

154 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:175:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

175 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:196:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

196 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:261:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

261 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:294:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

294 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:311:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

311 | struct {

| ^

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp: In function ‘size_t quantize_i2_s(const float*, void*, int64_t, int64_t, const float*)’:

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:46:100: warning: unused parameter ‘quant_weights’ [-Wunused-parameter]

46 | size_t quantize_i2_s(const float * src, void * dst, int64_t nrow, int64_t n_per_row, const float * quant_weights) {

| ~~~~~~~~~~~~~~^~~~~~~~~~~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp: In function ‘void ggml_vec_dot_i2_i8_s(int, float*, size_t, const void*, size_t, const void*, size_t, int)’:

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:95:28: warning: cast from type ‘const void*’ to type ‘uint8_t*’ {aka ‘unsigned char*’} casts away qualifiers [-Wcast-qual]

95 | const uint8_t * x = (uint8_t *)vx;

| ^~~~~~~~~~~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:96:28: warning: cast from type ‘const void*’ to type ‘int8_t*’ {aka ‘signed char*’} casts away qualifiers [-Wcast-qual]

96 | const int8_t * y = (int8_t *)vy;

| ^~~~~~~~~~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:94:52: warning: unused parameter ‘bs’ [-Wunused-parameter]

94 | void ggml_vec_dot_i2_i8_s(int n, float * s, size_t bs, const void * vx, size_t bx, const void * vy, size_t by, int nrc) {

| ~~~~~~~^~

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:94:80: warning: unused parameter ‘bx’ [-Wunused-parameter]

94 | void ggml_vec_dot_i2_i8_s(int n, float * s, size_t bs, const void * vx, size_t bx, const void * vy, size_t by, int nrc) {

| ~~~~~~~^~

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:94:108: warning: unused parameter ‘by’ [-Wunused-parameter]

94 | void ggml_vec_dot_i2_i8_s(int n, float * s, size_t bs, const void * vx, size_t bx, const void * vy, size_t by, int nrc) {

| ~~~~~~~^~

/home/sprout1345/git/BitNet/src/ggml-bitnet-mad.cpp:94:116: warning: unused parameter ‘nrc’ [-Wunused-parameter]

94 | void ggml_vec_dot_i2_i8_s(int n, float * s, size_t bs, const void * vx, size_t bx, const void * vy, size_t by, int nrc) {

| ~~~~^~~

[ 6%] Building CXX object 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/__/__/__/__/src/ggml-bitnet-lut.cpp.o

In file included from /home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:10:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:118: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

118 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:157: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

157 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:193: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

193 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:198: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

198 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:200: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

200 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:205: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

205 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:290: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

290 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:294: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

294 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:311: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

311 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:316: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

316 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:318: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

318 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:323: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

323 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:424: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

424 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:428: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

428 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:445: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

445 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:450: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

450 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:452: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

452 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:457: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

457 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:542: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

542 | #pragma unroll

|

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:546: warning: ignoring ‘#pragma unroll ’ [-Wunknown-pragmas]

546 | #pragma unroll

|

In file included from /home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-quants.h:4,

from /home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:9:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:154:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

154 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:175:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

175 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:196:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

196 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:261:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

261 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:294:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

294 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/./ggml-common.h:311:16: warning: ISO C++ prohibits anonymous structs [-Wpedantic]

311 | struct {

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:24:6: warning: no previous declaration for ‘void per_tensor_quant(int, void*, void*)’ [-Wmissing-declarations]

24 | void per_tensor_quant(int k, void* lut_scales_, void* b_) {{

| ^~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:53:6: warning: no previous declaration for ‘void partial_max_reset(void*)’ [-Wmissing-declarations]

53 | void partial_max_reset(void* lut_scales_) {{

| ^~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: In function ‘void tbl_impl_3200_8640(int32_t*, int8_t*, uint8_t*)’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:190:50: note: use ‘-flax-vector-conversions’ to permit conversions between vectors with differing element types or numbers of subparts

190 | const int8x16_t vec_zero = vdupq_n_s16(0x0000);

| ^

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:190:43: error: cannot convert ‘int16x8_t’ to ‘const int8x16_t’ in initialization

190 | const int8x16_t vec_zero = vdupq_n_s16(0x0000);

| ~~~~~~~~~~~^~~~~~~~

| |

| int16x8_t

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:202:44: error: cannot convert ‘const int8x16_t’ to ‘int16x8_t’

202 | vec_c[i] = vandq_s16(vec_c[i], vec_zero);

| ^~~~~~~~

| |

| const int8x16_t

In file included from /home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/ggml-bitnet.h:7,

from /home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:8:

/usr/lib/gcc/aarch64-linux-gnu/13/include/arm_neon.h:1099:37: note: initializing argument 2 of ‘int16x8_t vandq_s16(int16x8_t, int16x8_t)’

1099 | vandq_s16 (int16x8_t __a, int16x8_t __b)

| ~~~~~~~~~~^~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:217:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

217 | vec_c[0] += vec_v_left_0.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:217:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:218:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

218 | vec_c[0] += vec_v_right_0.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:218:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:219:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

219 | vec_c[1] += vec_v_left_0.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:219:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:220:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

220 | vec_c[1] += vec_v_right_0.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:220:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:231:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

231 | vec_c[0] += vec_v_left_1.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:231:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:232:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

232 | vec_c[0] += vec_v_right_1.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:232:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:233:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

233 | vec_c[1] += vec_v_left_1.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:233:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:234:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

234 | vec_c[1] += vec_v_right_1.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:234:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:245:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

245 | vec_c[2] += vec_v_left_2.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:245:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:246:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

246 | vec_c[2] += vec_v_right_2.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:246:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:247:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

247 | vec_c[3] += vec_v_left_2.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:247:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:248:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

248 | vec_c[3] += vec_v_right_2.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:248:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:259:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

259 | vec_c[2] += vec_v_left_3.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:259:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:260:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

260 | vec_c[2] += vec_v_right_3.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:260:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:261:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

261 | vec_c[3] += vec_v_left_3.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:261:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:262:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

262 | vec_c[3] += vec_v_right_3.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:262:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: At global scope:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:287:9: warning: no previous declaration for ‘int32_t qgemm_lut_3200_8640(void*, void*, void*, void*, void*)’ [-Wmissing-declarations]

287 | int32_t qgemm_lut_3200_8640(void* A, void* LUT, void* Scales, void* LUT_Scales, void* C) {

| ^~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: In function ‘void tbl_impl_3200_3200(int32_t*, int8_t*, uint8_t*)’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:308:43: error: cannot convert ‘int16x8_t’ to ‘const int8x16_t’ in initialization

308 | const int8x16_t vec_zero = vdupq_n_s16(0x0000);

| ~~~~~~~~~~~^~~~~~~~

| |

| int16x8_t

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:320:44: error: cannot convert ‘const int8x16_t’ to ‘int16x8_t’

320 | vec_c[i] = vandq_s16(vec_c[i], vec_zero);

| ^~~~~~~~

| |

| const int8x16_t

/usr/lib/gcc/aarch64-linux-gnu/13/include/arm_neon.h:1099:37: note: initializing argument 2 of ‘int16x8_t vandq_s16(int16x8_t, int16x8_t)’

1099 | vandq_s16 (int16x8_t __a, int16x8_t __b)

| ~~~~~~~~~~^~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:335:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

335 | vec_c[0] += vec_v_left_0.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:335:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:336:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

336 | vec_c[0] += vec_v_right_0.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:336:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:337:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

337 | vec_c[1] += vec_v_left_0.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:337:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:338:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

338 | vec_c[1] += vec_v_right_0.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:338:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:349:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

349 | vec_c[2] += vec_v_left_1.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:349:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:350:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

350 | vec_c[2] += vec_v_right_1.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:350:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:351:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

351 | vec_c[3] += vec_v_left_1.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:351:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:352:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

352 | vec_c[3] += vec_v_right_1.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:352:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:363:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

363 | vec_c[4] += vec_v_left_2.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:363:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:364:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

364 | vec_c[4] += vec_v_right_2.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:364:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:365:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

365 | vec_c[5] += vec_v_left_2.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:365:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:366:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

366 | vec_c[5] += vec_v_right_2.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:366:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:377:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

377 | vec_c[6] += vec_v_left_3.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:377:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:378:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

378 | vec_c[6] += vec_v_right_3.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:378:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:379:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

379 | vec_c[7] += vec_v_left_3.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:379:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:380:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

380 | vec_c[7] += vec_v_right_3.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:380:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: At global scope:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:421:9: warning: no previous declaration for ‘int32_t qgemm_lut_3200_3200(void*, void*, void*, void*, void*)’ [-Wmissing-declarations]

421 | int32_t qgemm_lut_3200_3200(void* A, void* LUT, void* Scales, void* LUT_Scales, void* C) {

| ^~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: In function ‘void tbl_impl_8640_3200(int32_t*, int8_t*, uint8_t*)’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:442:43: error: cannot convert ‘int16x8_t’ to ‘const int8x16_t’ in initialization

442 | const int8x16_t vec_zero = vdupq_n_s16(0x0000);

| ~~~~~~~~~~~^~~~~~~~

| |

| int16x8_t

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:454:44: error: cannot convert ‘const int8x16_t’ to ‘int16x8_t’

454 | vec_c[i] = vandq_s16(vec_c[i], vec_zero);

| ^~~~~~~~

| |

| const int8x16_t

/usr/lib/gcc/aarch64-linux-gnu/13/include/arm_neon.h:1099:37: note: initializing argument 2 of ‘int16x8_t vandq_s16(int16x8_t, int16x8_t)’

1099 | vandq_s16 (int16x8_t __a, int16x8_t __b)

| ~~~~~~~~~~^~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:469:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

469 | vec_c[0] += vec_v_left_0.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:469:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:470:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

470 | vec_c[0] += vec_v_right_0.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:470:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:471:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

471 | vec_c[1] += vec_v_left_0.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:471:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:472:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

472 | vec_c[1] += vec_v_right_0.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:472:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:483:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

483 | vec_c[0] += vec_v_left_1.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:483:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:484:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

484 | vec_c[0] += vec_v_right_1.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:484:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:485:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

485 | vec_c[1] += vec_v_left_1.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:485:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:486:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

486 | vec_c[1] += vec_v_right_1.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:486:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:497:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

497 | vec_c[2] += vec_v_left_2.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:497:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:498:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

498 | vec_c[2] += vec_v_right_2.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:498:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:499:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

499 | vec_c[3] += vec_v_left_2.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:499:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:500:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

500 | vec_c[3] += vec_v_right_2.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:500:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:511:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

511 | vec_c[2] += vec_v_left_3.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:511:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:512:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

512 | vec_c[2] += vec_v_right_3.val[0];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:512:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:513:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

513 | vec_c[3] += vec_v_left_3.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:513:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:514:22: error: invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)

514 | vec_c[3] += vec_v_right_3.val[1];

| ~~~~~~~~~^~~~~~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:514:22: note: in evaluation of ‘operator+=(int16x8_t, __Int8x16_t)’

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: At global scope:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:539:9: warning: no previous declaration for ‘int32_t qgemm_lut_8640_3200(void*, void*, void*, void*, void*)’ [-Wmissing-declarations]

539 | int32_t qgemm_lut_8640_3200(void* A, void* LUT, void* Scales, void* LUT_Scales, void* C) {

| ^~~~~~~~~~~~~~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: In function ‘void ggml_bitnet_transform_tensor(ggml_tensor*)’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:584:54: warning: ‘ggml_tensor::backend’ is deprecated: use the buffer type to find the storage location of the tensor [-Wdeprecated-declarations]

584 | if (!(is_type_supported(tensor->type) && tensor->backend == GGML_BACKEND_TYPE_CPU && tensor->extra == nullptr)) {

| ^~~~~~~

In file included from /home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/ggml-bitnet.h:3:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:585:48: note: declared here

585 | GGML_DEPRECATED(enum ggml_backend_type backend, "use the buffer type to find the storage location of the tensor");

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:192:41: note: in definition of macro ‘GGML_DEPRECATED’

192 | # define GGML_DEPRECATED(func, hint) func __attribute__((deprecated(hint)))

| ^~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:584:54: warning: ‘ggml_tensor::backend’ is deprecated: use the buffer type to find the storage location of the tensor [-Wdeprecated-declarations]

584 | if (!(is_type_supported(tensor->type) && tensor->backend == GGML_BACKEND_TYPE_CPU && tensor->extra == nullptr)) {

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:585:48: note: declared here

585 | GGML_DEPRECATED(enum ggml_backend_type backend, "use the buffer type to find the storage location of the tensor");

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:192:41: note: in definition of macro ‘GGML_DEPRECATED’

192 | # define GGML_DEPRECATED(func, hint) func __attribute__((deprecated(hint)))

| ^~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:584:54: warning: ‘ggml_tensor::backend’ is deprecated: use the buffer type to find the storage location of the tensor [-Wdeprecated-declarations]

584 | if (!(is_type_supported(tensor->type) && tensor->backend == GGML_BACKEND_TYPE_CPU && tensor->extra == nullptr)) {

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:585:48: note: declared here

585 | GGML_DEPRECATED(enum ggml_backend_type backend, "use the buffer type to find the storage location of the tensor");

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:192:41: note: in definition of macro ‘GGML_DEPRECATED’

192 | # define GGML_DEPRECATED(func, hint) func __attribute__((deprecated(hint)))

| ^~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:591:15: warning: unused variable ‘scales_size’ [-Wunused-variable]

591 | const int scales_size = 1;

| ^~~~~~~~~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp: In function ‘bool ggml_bitnet_can_mul_mat(const ggml_tensor*, const ggml_tensor*, const ggml_tensor*)’:

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:62:15: warning: ‘ggml_tensor::backend’ is deprecated: use the buffer type to find the storage location of the tensor [-Wdeprecated-declarations]

62 | src0->backend == GGML_BACKEND_TYPE_CPU) {

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:585:48: note: declared here

585 | GGML_DEPRECATED(enum ggml_backend_type backend, "use the buffer type to find the storage location of the tensor");

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:192:41: note: in definition of macro ‘GGML_DEPRECATED’

192 | # define GGML_DEPRECATED(func, hint) func __attribute__((deprecated(hint)))

| ^~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:62:15: warning: ‘ggml_tensor::backend’ is deprecated: use the buffer type to find the storage location of the tensor [-Wdeprecated-declarations]

62 | src0->backend == GGML_BACKEND_TYPE_CPU) {

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:585:48: note: declared here

585 | GGML_DEPRECATED(enum ggml_backend_type backend, "use the buffer type to find the storage location of the tensor");

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:192:41: note: in definition of macro ‘GGML_DEPRECATED’

192 | # define GGML_DEPRECATED(func, hint) func __attribute__((deprecated(hint)))

| ^~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:62:15: warning: ‘ggml_tensor::backend’ is deprecated: use the buffer type to find the storage location of the tensor [-Wdeprecated-declarations]

62 | src0->backend == GGML_BACKEND_TYPE_CPU) {

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:585:48: note: declared here

585 | GGML_DEPRECATED(enum ggml_backend_type backend, "use the buffer type to find the storage location of the tensor");

| ^~~~~~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../include/ggml.h:192:41: note: in definition of macro ‘GGML_DEPRECATED’

192 | # define GGML_DEPRECATED(func, hint) func __attribute__((deprecated(hint)))

| ^~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp: In function ‘size_t ggml_bitnet_mul_mat_get_wsize(const ggml_tensor*, const ggml_tensor*, const ggml_tensor*)’:

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:74:15: warning: unused variable ‘bits’ [-Wunused-variable]

74 | const int bits = ggml_bitnet_get_type_bits(src0->type);

| ^~~~

/home/sprout1345/git/BitNet/src/ggml-bitnet-lut.cpp:70:131: warning: unused parameter ‘dst’ [-Wunused-parameter]

70 | size_t ggml_bitnet_mul_mat_get_wsize(const struct ggml_tensor * src0, const struct ggml_tensor * src1, const struct ggml_tensor * dst) {

| ~~~~~~~~~~~~~~~~~~~~~~~~~~~^~~

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h: In function ‘void* aligned_malloc(size_t)’:

/home/sprout1345/git/BitNet/3rdparty/llama.cpp/ggml/src/../../../../include/bitnet-lut-kernels.h:12:19: warning: ignoring return value of ‘int posix_memalign(void**, size_t, size_t)’ declared with attribute ‘warn_unused_result’ [-Wunused-result]

12 | posix_memalign(&ptr, 64, size);

| ~~~~~~~~~~~~~~^~~~~~~~~~~~~~~~

gmake[2]: *** [3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/build.make:146: 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/__/__/__/__/src/ggml-bitnet-lut.cpp.o] Error 1

gmake[1]: *** [CMakeFiles/Makefile2:759: 3rdparty/llama.cpp/ggml/src/CMakeFiles/ggml.dir/all] Error 2

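gmake: *** [Makefile:136: all] Error 2

In short, x86 builds do not run into this. On ARM, however, the compiler rejects operations that mix NEON vector types unless the types match exactly, and that is what breaks the build on the Raspberry Pi. The log also shows which compiler was in use: GCC 13 (note /usr/lib/gcc/aarch64-linux-gnu/13/include/arm_neon.h). By default, GCC does not implicitly convert between distinct vector types such as int16x8_t and int8x16_t.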

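In the log, the same error, "invalid operands to binary + (have ‘int16x8_t’ and ‘__Int8x16_t’)", repeats over and over in bitnet-lut-kernels.h. It appears alongside related errors such as "cannot convert ‘int16x8_t’ to ‘const int8x16_t’ in initialization".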
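The two types in the message, int16x8_t and int8x16_t, each fill one 128-bit NEON register, but they split it into lanes differently. "Adding" one to the other only makes sense once an interpretation is chosen. One option is a raw bit reinterpretation, which is what vreinterpretq_s16_s8 does and roughly what Clang's default lax vector conversions do implicitly. The other is a widening conversion (vmovl_s8). GCC refuses to make that choice silently. The sketch below is plain Python that only models the lane arithmetic on a little-endian target such as aarch64 Linux. The function names are made up for illustration, and it does not reproduce the BitNet kernel itself.

```python
import struct


def reinterpret_s8x16_as_s16x8(lanes):
    """Model vreinterpretq_s16_s8: view the same 16 bytes as 8 int16 lanes.

    Assumes a little-endian target (aarch64 Linux is little-endian).
    """
    if len(lanes) != 16:
        raise ValueError("expected 16 int8 lanes")
    raw = struct.pack("<16b", *lanes)        # 16 signed bytes -> 16 raw bytes
    return list(struct.unpack("<8h", raw))   # same bytes read as 8 signed shorts


def widen_low_s8_to_s16(lanes):
    """Model vmovl_s8(vget_low_s8(v)): sign-extend the low 8 lanes to int16."""
    if len(lanes) != 16:
        raise ValueError("expected 16 int8 lanes")
    # Python ints already carry their sign, so widening keeps each value as-is.
    return list(lanes[:8])


lut = [1, 2, -1, 0, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14]
print(reinterpret_s8x16_as_s16x8(lut))  # [513, 255, 1027, 1541, 2055, 2569, 3083, 3597]
print(widen_low_s8_to_s16(lut))         # [1, 2, -1, 0, 3, 4, 5, 6]
```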

To get around this, the build has to be done differently.

First, run the code-generation script yourself. This generates include/bitnet-lut-kernels.h and include/kernel_config.ini.

python utils/codegen_tl1.py \
--model bitnet_b1_58-3B \
--BM 160,320,320 \
--BK 64,128,64 \
--bm 32,64,32

Normally, setup_env.py runs this step automatically. However, that script then goes straight on to the build, which fails as shown above, so here the step is done by hand.
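The three comma-separated values in --BM and --BK appear to be one tile size per weight-matrix shape. A tile only works if it divides the matrix dimension evenly, and the Python sketch below checks that arithmetic. The shapes it uses, (M, K) = (3200, 8640), (3200, 3200) and (8640, 3200), are an assumption inferred from function names such as qgemm_lut_3200_3200 and tbl_impl_8640_3200 in the build log above. check_tiles is a hypothetical helper, not part of BitNet, and --bm is deliberately left out.

```python
# Hypothetical sanity check for the codegen_tl1.py tile arguments.
# ASSUMPTION: the kernel shapes (M, K) below are inferred from function names
# like qgemm_lut_3200_3200 / tbl_impl_8640_3200 in the build log.
KERNEL_SHAPES = [(3200, 8640), (3200, 3200), (8640, 3200)]


def parse_list(arg):
    """Turn a CLI value such as "160,320,320" into [160, 320, 320]."""
    return [int(x) for x in arg.split(",")]


def check_tiles(shapes, bm_tiles, bk_tiles):
    """Return a list of problems; an empty list means every tile divides evenly."""
    if not (len(shapes) == len(bm_tiles) == len(bk_tiles)):
        raise ValueError("need exactly one BM and one BK value per kernel shape")
    problems = []
    for (m, k), tile_m, tile_k in zip(shapes, bm_tiles, bk_tiles):
        if m % tile_m:
            problems.append(f"M={m} is not a multiple of BM={tile_m}")
        if k % tile_k:
            problems.append(f"K={k} is not a multiple of BK={tile_k}")
    return problems


print(check_tiles(KERNEL_SHAPES, parse_list("160,320,320"), parse_list("64,128,64")))
# -> [] (3200/160=20, 8640/64=135, 3200/320=10, 3200/128=25, 8640/320=27, 3200/64=50)
```

For example, changing the first BK value to 128 would fail, because 8640 is not a multiple of 128.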

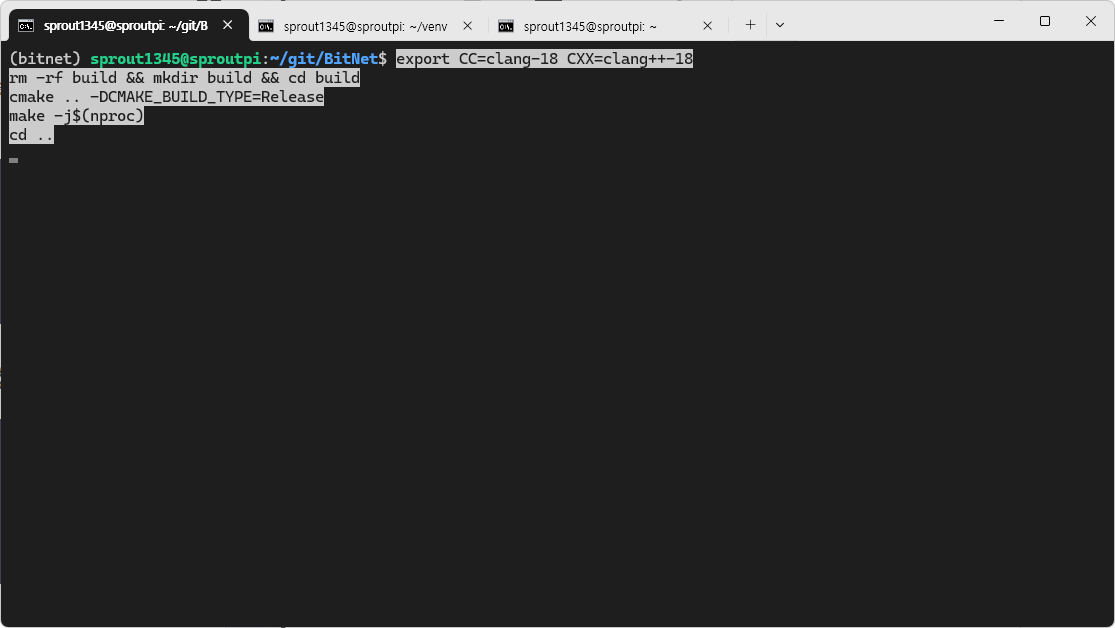

Then build with Clang.
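The whole workaround depends on CMake actually picking up Clang. Before running the commands, it may therefore be worth confirming that the clang-18 and clang++-18 binaries installed earlier by llvm.sh are on PATH. Below is a minimal Python sketch using the standard library's shutil.which. missing_tools is a hypothetical helper name, and the tool list is simply the set of commands used in this step.

```python
import shutil

# The commands the Clang build step relies on.
REQUIRED_TOOLS = ("clang-18", "clang++-18", "cmake", "make")


def missing_tools(names=REQUIRED_TOOLS, which=shutil.which):
    """Return the names that cannot be found on PATH (empty list = all present).

    `which` is injectable so the function can be tested without the real tools.
    """
    return [name for name in names if which(name) is None]


# Usage, e.g. from the activated venv:
#   missing = missing_tools()
#   if missing:
#       raise SystemExit("not on PATH: " + ", ".join(missing))
```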

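export CC=clang-18 CXX=clang++-18
rm -rf build && mkdir build && cd build
cmake .. -DCMAKE_BUILD_TYPE=Release
make -j$(nproc)
cd ..

Removing the old build directory matters here. CMake stores the compiler it detects in its cache on the first configure, so a build directory left over from the failed attempt above would most likely keep using GCC.

Running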

Now run it.

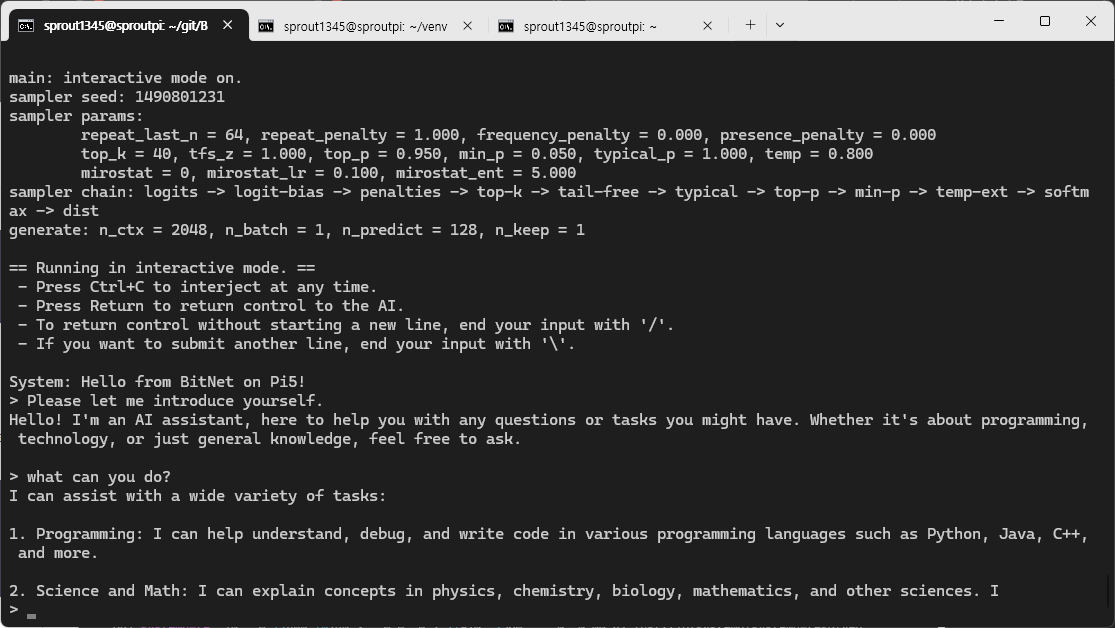

python run_inference.py \
-m models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf \
-p "Hello from BitNet on Pi5!" -cnv

This command starts an interactive chat session that uses "Hello from BitNet on Pi5!" as its system prompt.

While chatting with it, you can see that it uses noticeably fewer resources than conventional GPU-oriented language models run through ollama.

Compared with the llama3.2:1b model, it seems to understand the user's input comparatively better and to have somewhat more up-to-date knowledge.
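To put numbers on a resource comparison like this, one option is to record the peak memory of each run. The Python sketch below uses the standard resource module, which is Unix-only. run_and_measure is a hypothetical helper. It assumes a non-interactive run (for example with -n and without -cnv) so that the process exits on its own. A server-based setup such as ollama would likely need a different measurement, since its model runs in a separate long-lived process.

```python
import resource
import subprocess
import sys


def run_and_measure(cmd):
    """Run `cmd` to completion and return (returncode, peak_rss_mib).

    For RUSAGE_CHILDREN, ru_maxrss is the peak RSS of the largest terminated,
    waited-for descendant (grandchildren count if their parents waited for
    them), not a sum over the process tree. It is the largest value seen so
    far in this process, so measure each candidate from a fresh interpreter.
    On Linux ru_maxrss is in KiB (on macOS it is in bytes).
    """
    completed = subprocess.run(cmd, check=False)
    peak_kib = resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss
    return completed.returncode, peak_kib / 1024


if __name__ == "__main__":
    # e.g. python measure.py python run_inference.py -m <model.gguf> -p "Hi" -n 64
    rc, peak = run_and_measure(sys.argv[1:] or [sys.executable, "-c", "pass"])
    print(f"exit={rc} peak_rss={peak:.1f} MiB")
```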

python run_inference.py \
-m models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf \
-p "Hello from BitNet on Pi5!" \
-cnv -t 4 -c 2048

A command like this runs inference on 4 threads, one per core of the Pi 5's four-core CPU. It also allows a prompt context of up to 2048 tokens.
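If you launch the model from your own scripts, it can help to derive these flags rather than hard-code them. The Python sketch below uses only flags listed in the run_inference.py help text (-m, -p, -t, -c, -n, -cnv). build_inference_cmd is a hypothetical helper. Defaulting the thread count to os.cpu_count() is a design choice of this sketch, not something run_inference.py does itself.

```python
import os
import shlex
import sys


def build_inference_cmd(model, prompt, threads=None, ctx_size=2048,
                        n_predict=None, conversation=True):
    """Build an argument list for BitNet's run_inference.py.

    threads defaults to the CPU count the OS reports (4 on a Pi 5).
    n_predict is only passed when given, so otherwise the script's own default applies.
    """
    threads = threads or os.cpu_count() or 1
    cmd = [sys.executable, "run_inference.py",
           "-m", model, "-p", prompt,
           "-t", str(threads), "-c", str(ctx_size)]
    if n_predict is not None:
        cmd += ["-n", str(n_predict)]  # raise this if answers get cut off
    if conversation:
        cmd.append("-cnv")  # with -cnv, -p is used as the system prompt
    return cmd


cmd = build_inference_cmd("models/BitNet-b1.58-2B-4T/ggml-model-i2_s.gguf",
                          "Hello from BitNet on Pi5!", threads=4)
print(shlex.join(cmd))
# To start it from the BitNet checkout: subprocess.run(cmd, check=True)
```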

usage: run_inference.py [-h] [-m MODEL] [-n N_PREDICT] -p PROMPT [-t THREADS] [-c CTX_SIZE] [-temp TEMPERATURE] [-cnv]

Run inference

optional arguments:
  -h, --help            show this help message and exit
  -m MODEL, --model MODEL
                        Path to model file
  -n N_PREDICT, --n-predict N_PREDICT
                        Number of tokens to predict when generating text
  -p PROMPT, --prompt PROMPT
                        Prompt to generate text from
  -t THREADS, --threads THREADS
                        Number of threads to use
  -c CTX_SIZE, --ctx-size CTX_SIZE
                        Size of the prompt context
  -temp TEMPERATURE, --temperature TEMPERATURE
                        Temperature, a hyperparameter that controls the randomness of the generated text
  -cnv, --conversation  Whether to enable chat mode or not (for instruct models.)
                        (When this option is turned on, the prompt specified by -p will be used as the system prompt.)

Those are all the available options. If replies keep getting cut off partway through, adjust the -n option, which sets how many output tokens are generated.

References

GitHub - microsoft/BitNet: Official inference framework for 1-bit LLMs. https://github.com/microsoft/BitNet

Building BitNet on a Raspberry Pi 5 (arm64): Solving compiling errors when building BitNet on RaspberryPi 5 (arm64). https://www.eddieoz.com/building-bitnet-on-raspberry-pi-5-arm64-2/

BitNet b1.58 on Raspberry Pi 4B: setup guide tested on a Raspberry Pi 4B (2GB RAM) with Raspberry Pi OS (64-bit) released 2024-11-19. https://www.bijanbowen.com/bitnet-b1-58-on-raspberry-pi-4b/
